18. In Which of These Scenarios Should Hadoop Be Used?

Scenario-Based Hadoop Interview Questions. Q2: When is Hadoop useful for an application?

Top 40 Hadoop Interview Questions And Answers In 2022

Almost everybody thinks their data is big.

Let's take a look at these use cases from two overlapping perspectives: technical and business. Hope these questions are helpful for you. When random data access is required.

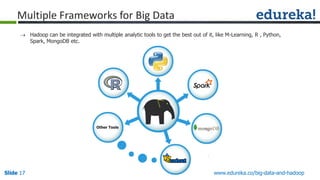

They tend to pick Hadoop if they prefer an open-source solution, want better performance with less administration overhead, need low-latency access for interactive querying or iterative algorithms (e.g., machine learning), or require enormous file storage. Performance: when it comes to Big Data, Hadoop promises higher performance. Hadoop Quiz 4. Organizations can dump large.

Check the same below. When the application requires low-latency data access.

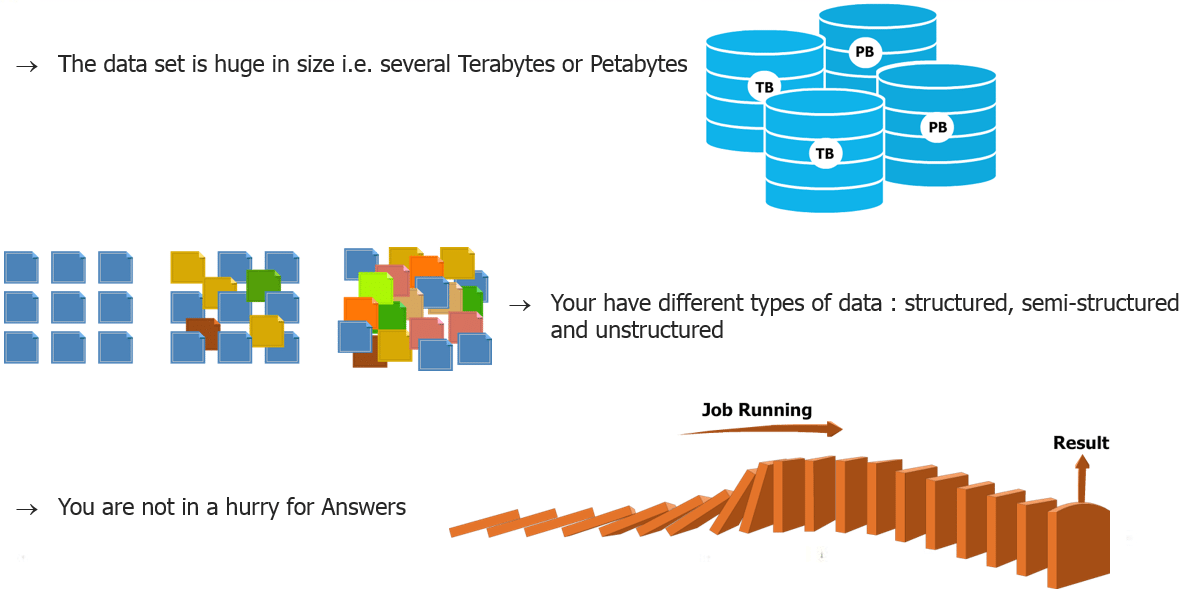

A software architect looks at the conditions organizations usually want to meet when adopting data warehouses and Hadoop to handle their data needs. Hadoop is not appropriate for near-real-time interactive analysis. I used to use Hadoop to process any dataset of around 10 GB or more, which is still a bit of overkill; once it gets to 100 GB, you definitely want something like Hadoop.

This quiz will help you revise the concepts of Apache Hadoop and build up your confidence in Hadoop. When all of the application data is unstructured. We know that data is growing at a very high rate, and handling this big data with an RDBMS is not feasible; Hadoop was introduced to overcome this.

Forrester predicted that CIOs who were late to the Hadoop game would finally make the platform a priority in 2015. Hadoop is sometimes described as a new technology designed to replace relational databases, but in practice it is used alongside them. We are in the analysis phase of a project in which we are replacing an old storage system with one based on HDFS, with Hive used for reporting and viewing.

When work can be parallelized. Five Reasons You Should Use Hadoop. Q3: With the help of InfoSphere Streams, Hadoop can be used with data at rest as well as data in motion.

Binary data cannot be used by the Hadoop framework. We know you will enjoy the other quizzes as well. These Hadoop interview questions specify how you implement your Hadoop knowledge and.

In which of these scenarios should Hadoop be used? The reason for asking such Hadoop interview questions is to check your Hadoop skills. Often you will be asked some tricky Big Data interview questions about particular scenarios and how you would handle them.

My apologies in advance, as I am a complete novice in this technology, although I understand databases like MySQL well. Also, I would love to know your experience and the questions asked in your interview. None of the options is correct.

It cannot be used as a key, for example. These components are able to serve a broad spectrum of applications. From the series of six quizzes on Hadoop, this is the fourth Hadoop quiz.

Hadoop Quiz 3. Big data is nothing but a massive amount of data which cannot be stored, processed, and analyzed using our.

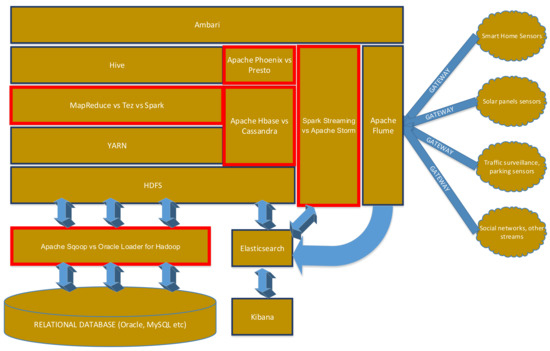

Hadoop was designed for large batch processing of, say, a few hours of data or more. The Hadoop ecosystem contains many components, such as MapReduce, Hive, HBase, ZooKeeper, Apache Pig, etc. But don't even think about Hadoop if the data you want to process is measured in MBs or GBs.

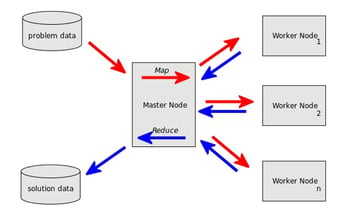

But we got a request asking whether we can make use of HDFS to. We can use MapReduce to perform aggregation and summarization on big data. QUESTION 18 (1 point possible): In which of these scenarios should Hadoop be used? (From EDUC 105 at Mount Saint Vincent University.)
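To make the aggregation and summarization point concrete, here is a minimal, single-machine sketch of the map, shuffle/sort, and reduce flow in Python. It only simulates what Hadoop does across a cluster: the `region,amount` records and the names `mapper`, `reducer`, and `run_job` are hypothetical, chosen for illustration, and are not Hadoop APIs. In a real job the mapper and reducer would run as separate tasks (for example via Hadoop Streaming), and the framework itself would sort and group the map output between them.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical input records in "region,amount" form (illustration only).
RECORDS = [
    "east,120.0",
    "west,80.5",
    "east,30.0",
    "north,200.0",
    "west,19.5",
]


def mapper(line):
    """Map phase: parse one input record and emit a (key, value) pair."""
    region, amount = line.split(",")
    yield region, float(amount)


def reducer(key, values):
    """Reduce phase: summarize every value that shares one key."""
    values = list(values)
    return key, {
        "count": len(values),
        "sum": sum(values),
        "min": min(values),
        "max": max(values),
    }


def run_job(records):
    """Simulate one MapReduce job on a single machine."""
    # Map: apply the mapper to every record independently.
    pairs = [pair for line in records for pair in mapper(line)]
    # Shuffle/sort: order the map output by key so equal keys are adjacent,
    # mimicking how Hadoop delivers grouped keys to each reducer.
    pairs.sort(key=itemgetter(0))
    # Reduce: call the reducer once per distinct key.
    return dict(
        reducer(key, (value for _, value in group))
        for key, group in groupby(pairs, key=itemgetter(0))
    )


if __name__ == "__main__":
    for region, stats in run_job(RECORDS).items():
        print(region, stats)
```

Because each record is mapped independently and each key is reduced independently, both phases can be spread over many machines. That is the "work can be parallelized" property that makes a job a good fit for Hadoop.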

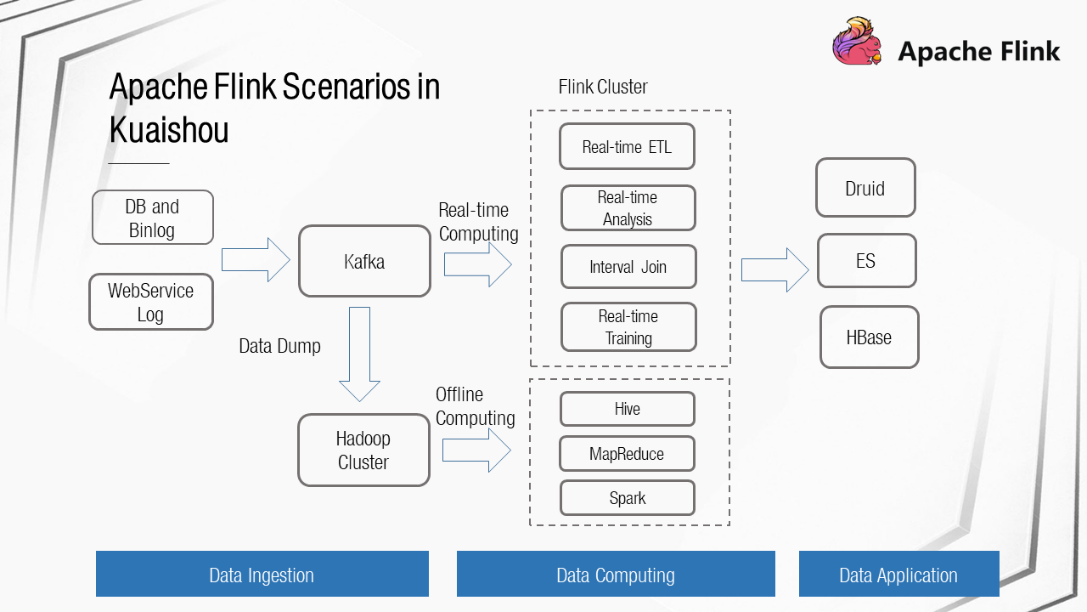

Some went so far as to call Hadoop an ETL killer, putting ETL vendors at risk and on the defensive. Fortunately, many of these vendors quickly responded with new HDFS connectors, making it easier for organizations to leverage their ETL investments in this new Hadoop world. You should also have good hands-on experience with these tools and te.

If you want to do real-time analytics where you expect results quickly, Hadoop should not be used directly.

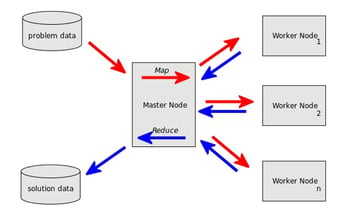

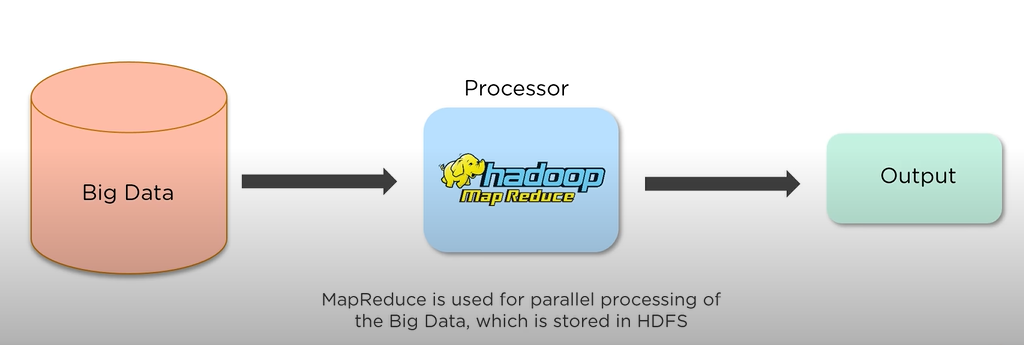

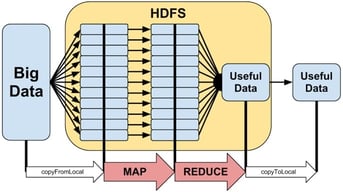

The diagram below explains how processing is done using MapReduce in Hadoop.

This is because Hadoop works on batch processing, so response time is high. Hi, I would like to have some expert views on the use of a big data platform like Hadoop in one of my project scenarios. Binary data can be used in MapReduce only with very limited functionality.

Your Data Sets Are Really Big. I will list those in this Hadoop scenario-based interview questions post. Since Hadoop cannot be used for real.

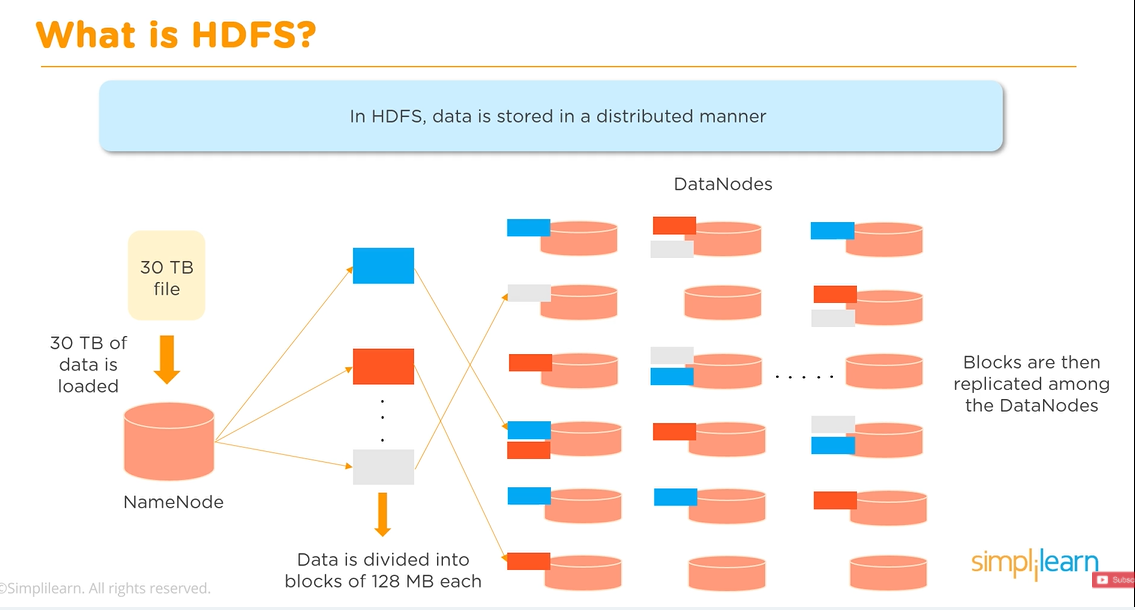

These include HDFS, MapReduce, YARN, Sqoop, HBase, Pig, and Hive. Hadoop includes open-source components and closed-source components.

Processing billions of email messages to perform text analytics: correct. Hadoop Quiz 5.
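For the email text-analytics scenario, here is a sketch of the same idea applied to term counting, again simulated in memory in Python. The sample `EMAILS`, the tokenizer regex, and the function names are assumptions for illustration only. The mapper uses in-mapper combining: it counts words within one message before emitting them, which cuts down how many pairs would have to be shuffled across the network in a real Hadoop job (Hadoop's combiner serves a similar purpose).

```python
import re
from collections import Counter

# Hypothetical tokenizer: runs of lowercase letters and apostrophes.
TOKEN = re.compile(r"[a-z']+")

# Hypothetical sample messages standing in for a large email corpus.
EMAILS = [
    "Meeting moved to Friday. Friday works for everyone?",
    "Please review the invoice before the meeting.",
    "Invoice attached.",
]


def map_email(body):
    """Map phase with in-mapper combining: count words within one message
    first, then emit one (word, partial_count) pair per distinct word."""
    local_counts = Counter(TOKEN.findall(body.lower()))
    yield from local_counts.items()


def reduce_counts(pairs):
    """Reduce phase: add up the partial counts emitted for each word."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return totals


def term_frequencies(emails):
    """Run the map phase over every message, then a single in-memory reduce."""
    return reduce_counts(pair for body in emails for pair in map_email(body))


if __name__ == "__main__":
    print(term_frequencies(EMAILS).most_common(3))
```

In a cluster, each message (or block of messages) would be mapped on whichever node holds it, and the word keys would be partitioned across several reducers rather than summed in one place as here.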

Hadoop, at least in its current, mostly MapReduce-based incarnation, imposes limitations in terms of how applications are programmed and how. Staging area for data warehouse analytics: using Hadoop as a vehicle to load data into a traditional data warehouse for data mining, OLAP, reporting, etc., and for loading data into an analytical store for advanced analytics.

You can use these Hadoop interview questions to prepare for your next Hadoop interview. Binary data should be converted to a Hadoop-compatible format prior to loading.

Do share those Hadoop interview questions in the comment box. All of the options are correct.

Hadoop can freely use binary files with MapReduce jobs so long as the files have headers. Hadoop has become a must-know technology and has brought better careers, salaries, and job opportunities for many professionals. This particular Hadoop scenario was quite popular early on.

We are creating a product which would be used to analyse d.

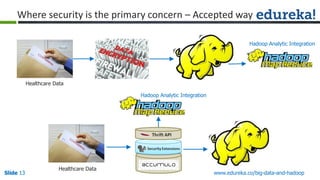

Real-Time Analytics: The Industry-Accepted Way. Hive is a data warehouse project built on top of HDFS.

To crack an interview for Hadoop technology you need to know the basics of Hadoop and the different frameworks used in big data to handle data.

What Is Hadoop Good For? Best Uses, Alternatives, and Tools (HostingAdvice.com)

5 Scenarios When To Use When Not To Use Hadoop

Sensors: Hadoop-Oriented Smart Cities Architecture

Summary Of The Different Big Data Tools By Liverungrow Medium

Hadoop IBM Course Certificate Exam Answers: Cognitive Class Hadoop 101 Answers (Everything Trending)

3 Ways To Use Hadoop Without Throwing Out The Dwh

When To And When Not To Use Hadoop

Batch Processing on Raw Data Sets: The EMR Scripts Used (c1.medium)

Trillions of Bytes of Data per Day: Application and Evolution of Apache Flink in Kuaishou (Alibaba Cloud Community)